Wasserstein GAN and the Kantorovich-Rubinstein Duality

From what I can tell, there is much interest in the recent Wasserstein GAN paper. In this post, I don’t want to repeat the justifications, mechanics and promised benefit of WGANs, for this you should read the original paper or this excellent summary. Instead, we will focus mainly on one detail that is only mentioned quickly, but I think lies in some sense at the heart of it: the Kantorovich-Rubinstein duality, or rather a special case of it. This is of course not a new result, but the application is very clever and attractive.

The paper cites the book “Optimal Transport - Old and New” by Fields-Medal winner and french eccentric Cedric Villani, you can download it from his homepage. That’s about a thousand pages targeted at math PhDs and researchers - have fun! Villani also talks about this topic in an accessible way in this lecture, at around the 28 minute mark. Generally though, I found it very hard to find material that gives real explanations but is not bursting with definitions and references to theorems I didn’t know. Maybe this post will help to fill this gap little bit. We will only use basic linear algebra, probability theory and optimization. These will not be rigorous proofs, and we will generously imply many regularity conditions. But I tried to make the chains of reasoning as clear and complete as possible, so it should be enough get some intuition for this subject.

The argument for our case of the Kantorovich-Rubinstein duality is actually not too complicated and stands for itself. It is, however, very abstract, which is why I decided to defer it to the end and start with the nice discrete case and somewhat related problems in Linear Programming.

If you’re interested, you can take a look at the Jupyter notebook that I created to plot some of the graphics in this post.

Earth Mover’s Distance

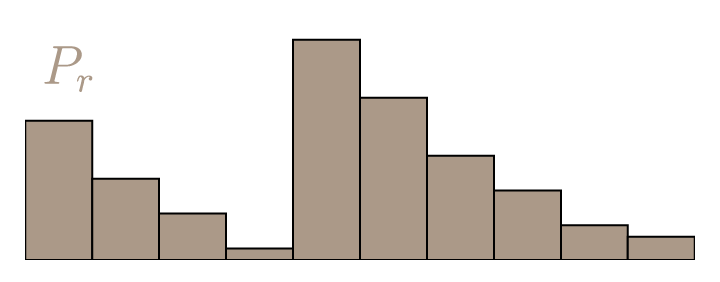

For discrete probability distributions, the Wasserstein distance is also descriptively called the earth mover’s distance (EMD). If we imagine the distributions as different heaps of a certain amount of earth, then the EMD is the minimal total amount of work it takes to transform one heap into the other. Work is defined as the amount of earth in a chunk times the distance it was moved. Let’s call our discrete distributions and , each with possible states or respectively, and take two arbitrary distributions as an example.

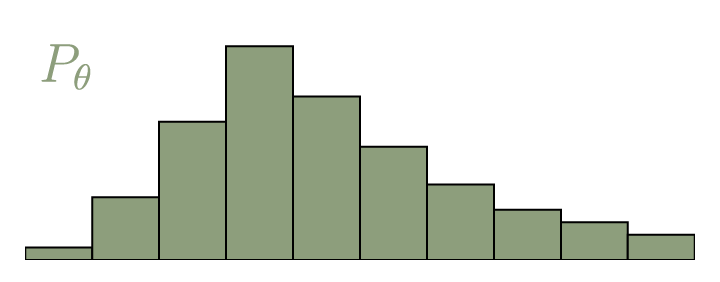

Calculating the EMD is in itself an optimization problem: There are infinitely many ways to move the earth around, and we need to find the optimal one. We call the transport plan that we are trying to find . It simply states how we distribute the amount of earth from one place over the domain of , or vice versa.

To be a valid transport plan, the constraints and must of course apply. This ensures that following this plan yields the correct distributions. Equivalently, we can call a joined probability distribution and require that , where is the set of all distributions whose marginals are or respectively. To get the EMD, we have to multiply every value of with the Euclidian distance between and . With that, the definition of the Earth mover’s distance is:

If you’re not familiar with the expression , it stands for infimum, or greatest lower bound. It is simply a slight mathematical variation of the minimum. The opposite is or supremum, roughly meaning maximum, which we will come across later. We can also set and , with . Now we can write

where is the Frobenius inner product (sum of all the element-wise products).

Linear Programming

In the picture above you can see the optimal transport plan . It can be calculated using the generic method of Linear Programming (LP). With LP, we can solve problems of a certain canonical form: Find a vector that minimizes the cost . Additionally, is constrained by the equation and .

To cast our problem of finding the EMD into this form, we have to flatten and :

This means . For the constraints, we concatenate the target distributions, which makes :

For , we have to construct a large sparse binary matrix that picks out the values from and sums them to get . This schematic should make it clear:

With that, we can call a standard LP routine, for example linprog() from scipy.

import numpy as np

from scipy.optimize import linprog

# We construct our A matrix by creating two 3-way tensors,

# and then reshaping and concatenating them

A_r = np.zeros((l, l, l))

A_t = np.zeros((l, l, l))

for i in range(l):

for j in range(l):

A_r[i, i, j] = 1

A_t[i, j, i] = 1

A = np.concatenate((A_r.reshape((l, l**2)), A_t.reshape((l, l**2))), axis=0)

b = np.concatenate((P_r, P_t), axis=0)

c = D.reshape((l**2))

opt_res = linprog(c, A_eq=A, b_eq=b)

emd = opt_res.fun

gamma = opt_res.x.reshape((l, l))

Now we have our transference plan, as well as the EMD.

Dual Form

Unfortunately, this kind of optimization is not practical in many cases, certainly not in domains where GANs are usually used. In our example, we use a one-dimensional random variable with ten possible states. The number of possible discrete states scales exponentially with the number of dimensions of the input variable. For many applications, e.g. images, the input can easily have thousands of dimensions. Even an approximation of is then virtually impossible.

But actually we don’t care about . We only want a single number, the EMD. Also, we want to use it to train our generator network, which generates the distribution . To do this, we must be able to calculate the gradient . Since and are only constraints of our optimization, this not possible in any straightforward way.

As it turns out, there is another way of calculating the EMD that is much more convenient. Any LP has two ways in which the problem can be formulated: The primal form, which we just used, and the dual form.

By changing the relations between the same values, we can turn our minimization problem into a maximization problem. Here the objective directly depends on , which contains the our distributions and . This is exactly that we want. It is easy to see that is a lower bound of :

This is called the Weak Duality theorem. As you might have guessed, there also exists a Strong Duality theorem, which states that, should we find an optimal solution for , then . Proving it is a bit more complicated and requires Farkas theorem as an intermediate result.

Farkas Theorem

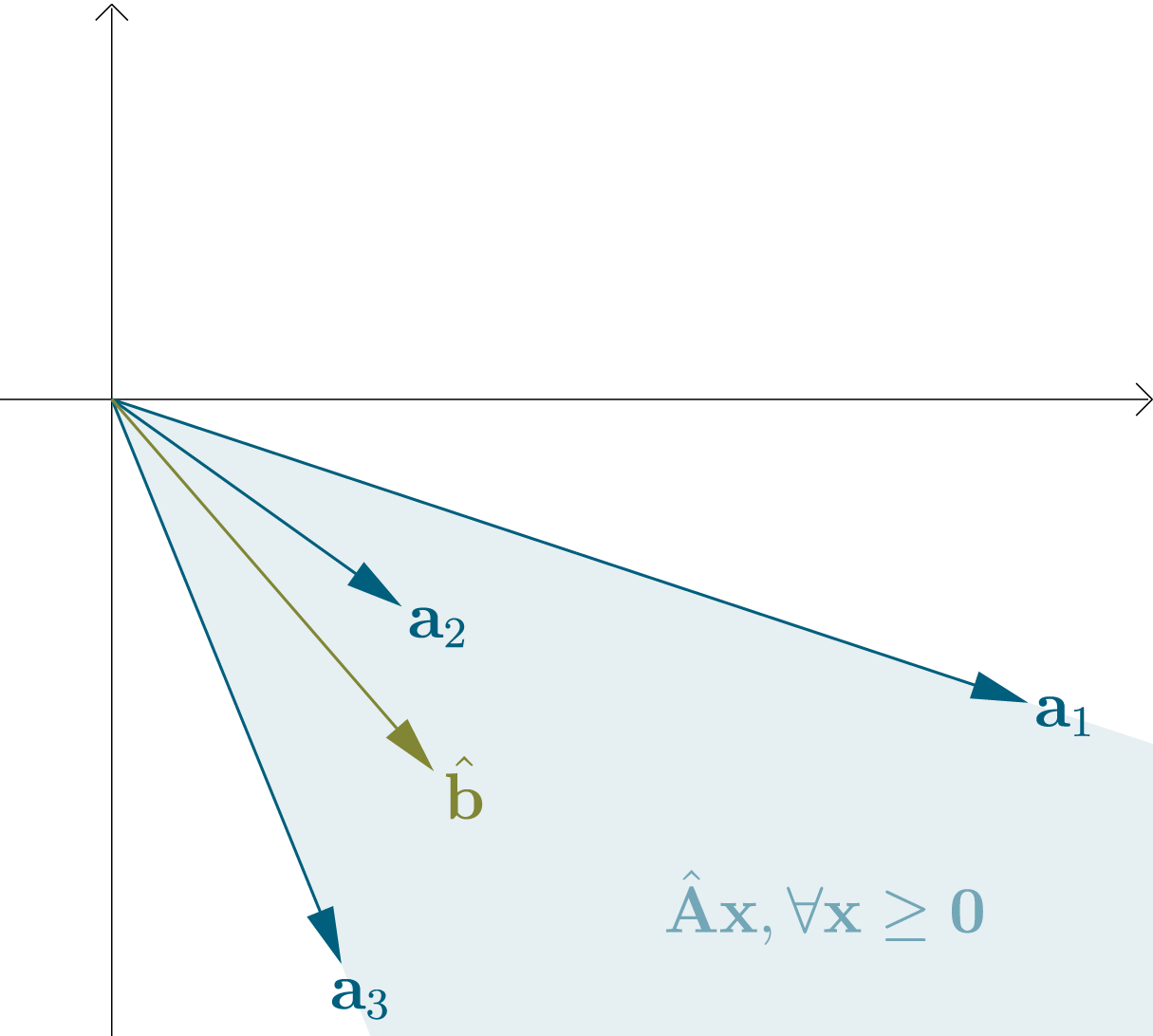

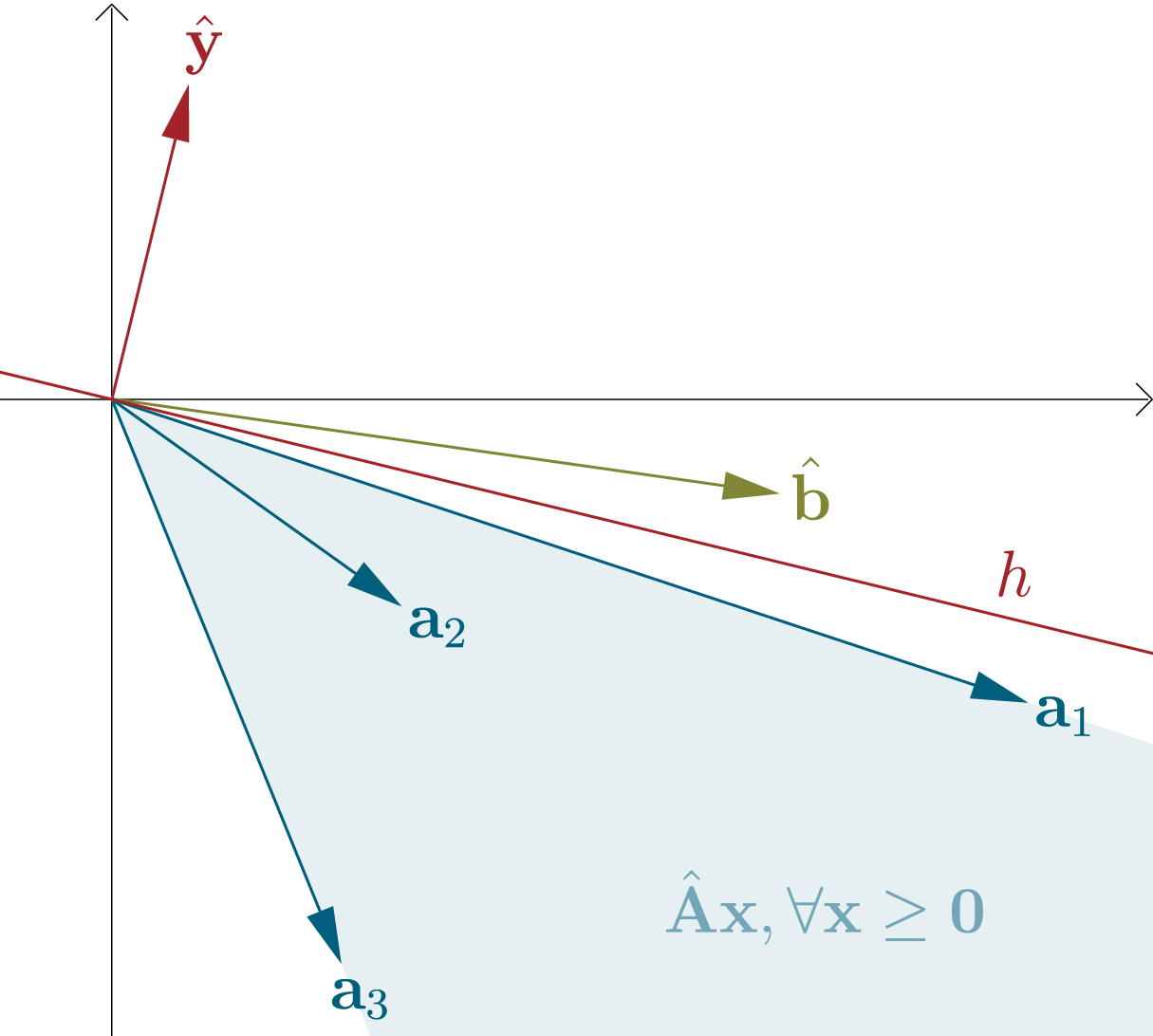

We can regard the columns of a matrix as vectors . The set of all possible linear combinations of these vectors with nonnegative coefficients is a convex cone with its apex (peak) at the origin (note that a convex cone could also potentially cover the whole space). We can combine these coefficients in a vector .

For a vector , there are now exactly two possibilities: Either is contained in the cone, or not. If is not contained, then we can fit a hyperplane that goes through the origin between the convex cone and . We can define it in terms of only its normal vector . If a vector lies on , then , if lies in the upper half-space of (the same side as ), then and if lies in the lower half-space (the opposite side of ), then . As we specified, if exists, then lies in a different half-space than all vectors .

Summarized, exactly one of the following statements is true:

- There exists , so that and

- There exists , so that and

This is called Farkas theorem, or Farkas alternatives. There exist slightly different versions and several proofs, but what we showed is sufficient for our purposes.

Strong Duality

The trick for the second part of this proof is to construct a problem that is related to our original LP forms, but with one additional dimension and in such a way that lies right at the edge of the convex cone. Then according to Farkas, for some , the corresponding hyperplane comes arbitrarily close to . From this, in combination with the Weak Duality theorem, we will proof the Strong Duality.

Let the minimal solution to the primal problem be . Then we define

with . For , we have Farkas case , because . For , there exists no nonnegative solution (because is already minimal) and we have Farkas case . This means there exist and , such that

or equivalently

The way we constructed it, we can find so that additionally . Then changing to any number greater than has to result in . In our specific problem, this is only possible if , because . We showed that is simply the normal vector of a hyperplane, and because of that we can freely scale it to any magnitude greater than zero. Particularly, we can scale it so that . This means there exists a vector , so that

We see that for any is a feasible value of the objective of our dual problem. From the Weak Duality theorem, we know that . We just showed that can get arbitrarily close to . This means the optimal (maximal) value of our dual form is also .

Dual Implementation

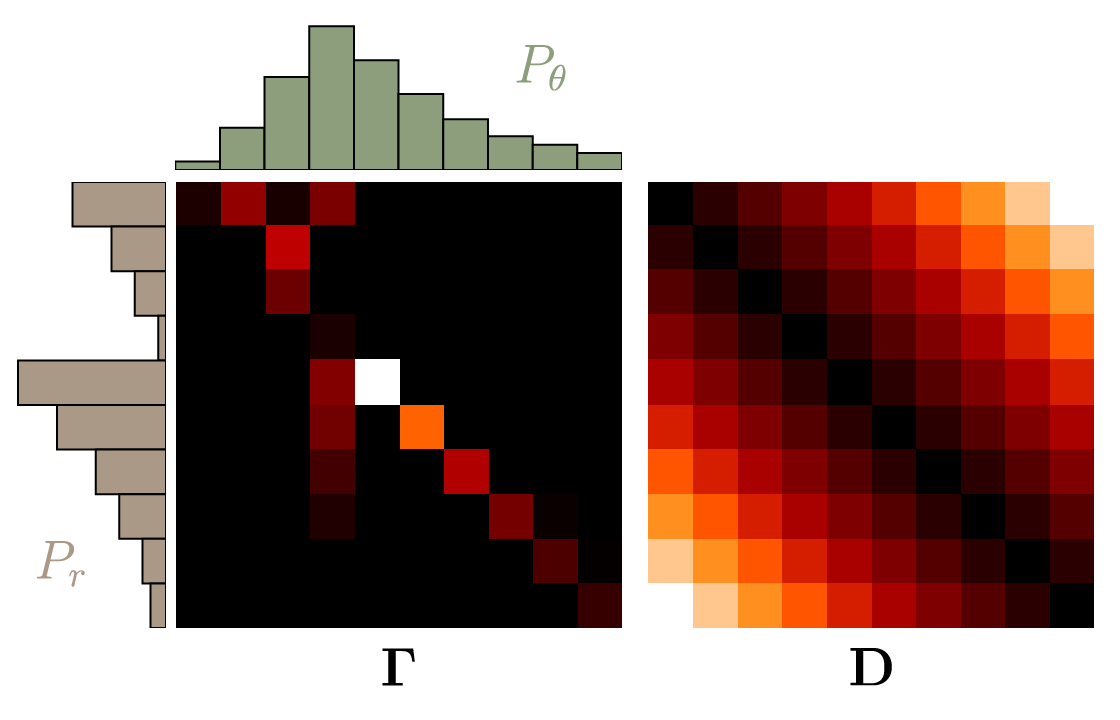

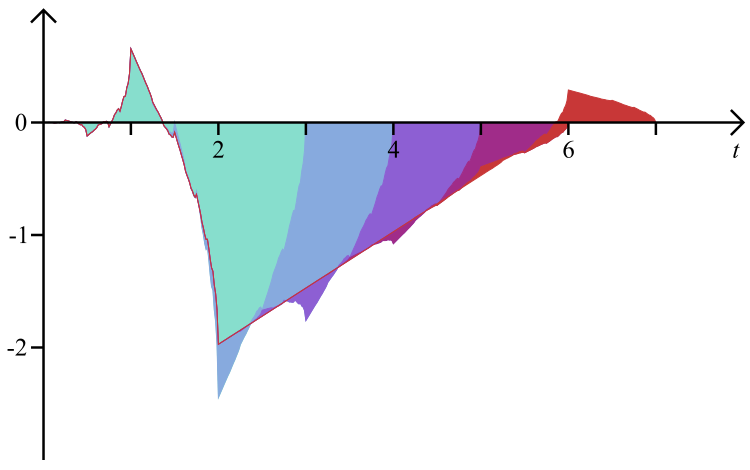

Now we can confidently use the dual form to calculate the EMD. As we showed, the maximal value is the EMD. Let’s define

with . This means . Recall the constraints of the dual form: .

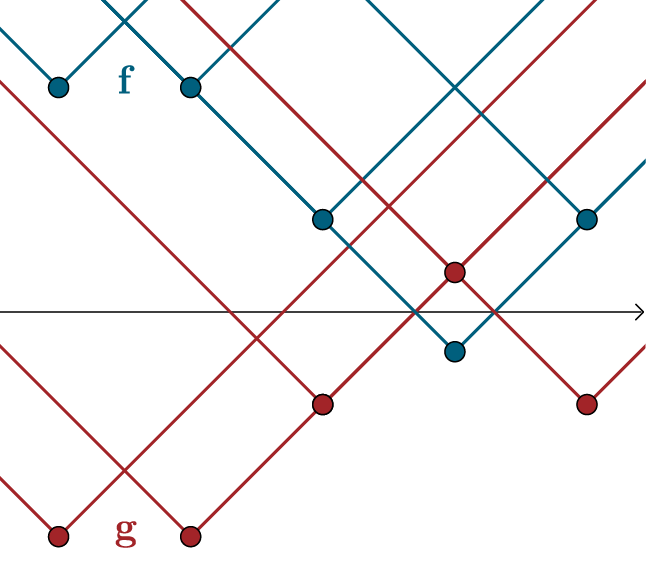

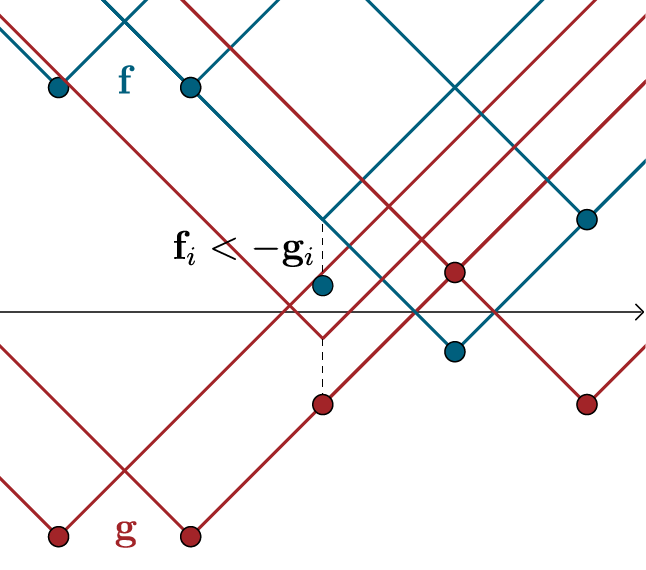

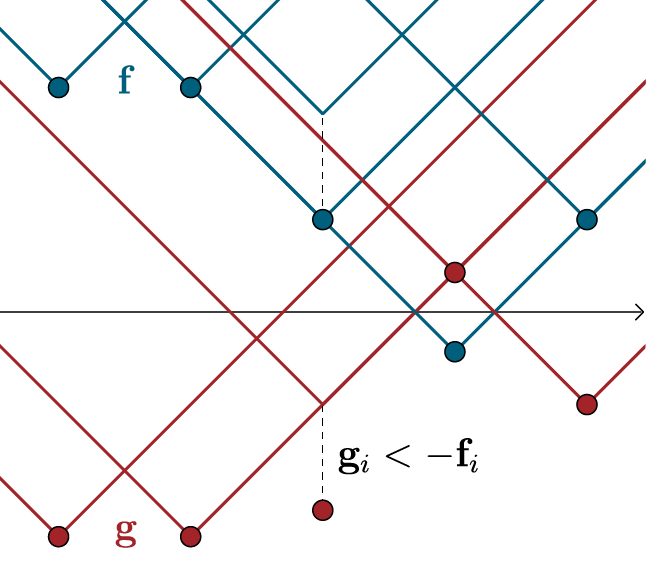

We have written the vectors and as values of the functions and . The constraints can be summarized as . The case yields for all , because . Since and are nonnegative, to maximize our objective, has to be as great as possible. This sum has a maximum value of , which we achieve with . This remains true given all additional constraints: Choosing would only make sense if it had benefits for values and with . Since and remain upper-bounded by the other constraints, this is not the case.

For the constraints become and . If we see the values as connected with line segments, this means that the upward and the downward slope of these segments is limited. In our case, where we use Euclidian distances, these slope limits are and . We call this constraint Lipschitz continuity (with Lipschitz constant ) and write . With that, our dual form of the EMD is

The implementation is straightforward:

# linprog() can only minimize the cost, because of that

# we optimize the negative of the objective. Also, we are

# not constrained to nonnegative values.

opt_res = linprog(-b, A, c, bounds=(None, None))

emd = -opt_res.fun

f = opt_res.x[0:l]

g = opt_res.x[l:]

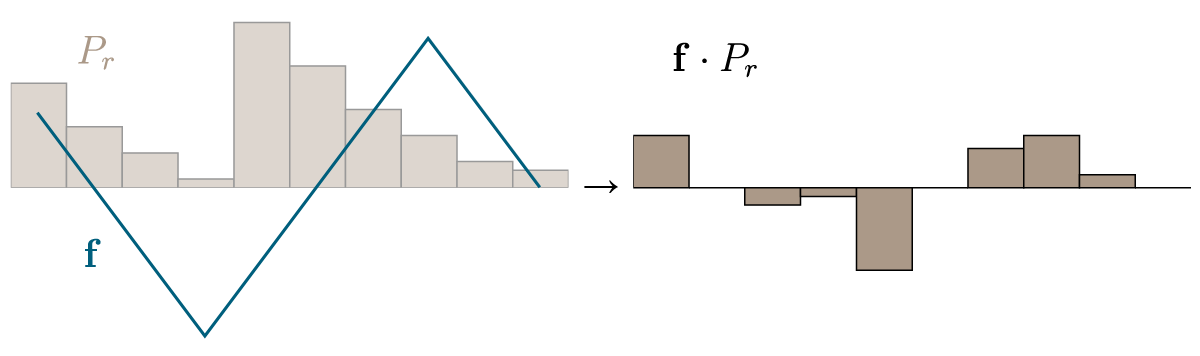

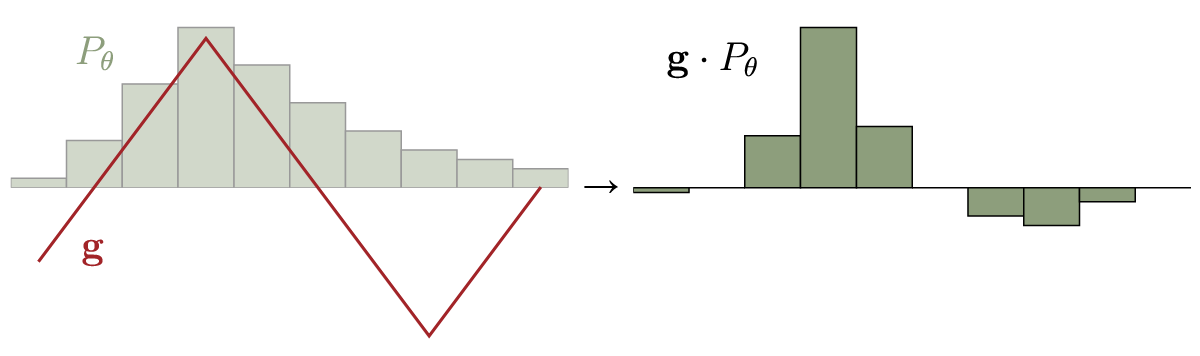

As we see, the optimal strategy is of course to set to high values where , and to low values where .

Wasserstein Distance

Lastly, we have to consider continuous probability distributions. We can of course view them intuitively as discrete distributions with infinitely many states and use a similar reasoning as described so far. But as I mentioned at the beginning, we will try something neater. Let our continuous distributions be and , and the set of joined distributions with marginals and be . Then the Wasserstein distance is defined as

If we add suitable terms, we can remove all constraints on the distribution . This is done by adding an additional optimization over a function , which rules out all as solutions:

Now we have bilevel optimization. This means we take the optimal solution of the inner optimization () for every value of the outer optimization (), and from these possible solutions choose the one that is optimal for the outer optimization. To continue, we have to make use of the minimax-principle, which says that in certain cases we can invert the order of and without changing the solution. But first we have to show that our problem is indeed such a case.

Consider some function . Let (‘the least of all maxima’) and (‘the greatest of all minima’). The argument that is simple: Any is automatically allowed as a candidate for the infimum of , but not the other way around.

For , at least one of these statements must be true:

- for some . This is only possible if is not convex in , because is already an infimum for .

- for some . This is only possible if is not concave in , because is already a supremum for .

This means of course that, if is convex and is concave, then the minimax principle applies and . In our case, we can above already see from the underbrace that the convexity condition is met. Let’s try changing to :

We see that the infimum is concave, as required. Because all functions that are Lipschitz continuous produce the same optimal solution for , and only they are feasible solutions of , we can turn this condition into a constraint. With that, we have the dual form of the Wasserstein distance:

This is our case of the Kantorovich-Rubinstein duality. It actually holds for other metrics than just the Euclidian metric we used. But the function is suitable to be approximated by a neural network, and this version has the advantage that the Lipschitz continuity can simply be achieved by clamping the weights.

Leave a Comment