-

MindCodec Retweeted

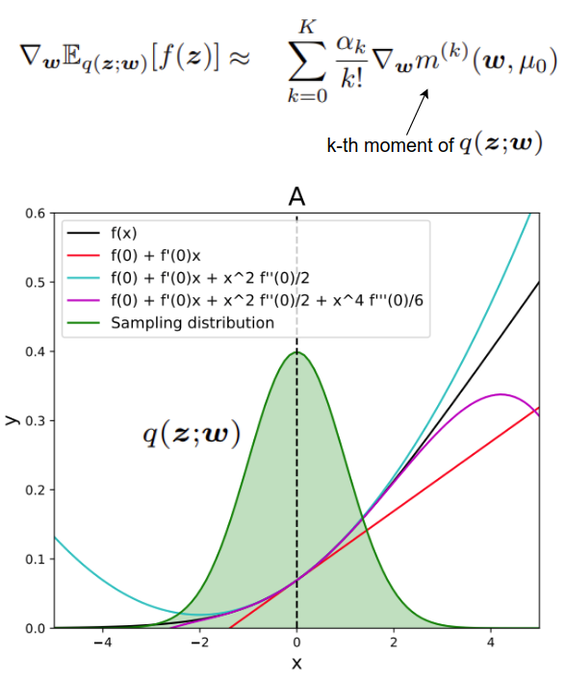

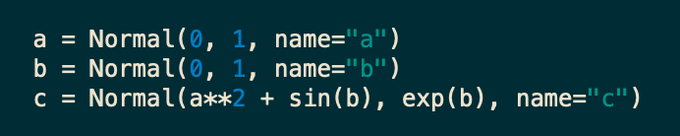

We are currently researching new methods for constructing variational distributions automatically (beyond the standard mean-field approach). If you know of any literature on this topic, please let us know! (And retweet if you have followers who might know!)

-

MindCodec Retweeted

Is there a comprehensive review on the effect of SGD batch size on generalization?

I've seen papers arguing that large batch GD does not generalise, and also papers that argue the opposite or say the generalisation gap is not all that bad for large-batch SGD. So which one is it?

-

MindCodec Retweeted

A new @artcogsys preprint! We present DeepRF: ultrafast population receptive field (pRF) mapping with #deeplearning.

With similar performance in a fraction of the time, it enables modeling of more complex pRF models, resolving an important limitation of the conventional method. https://twitter.com/biorxivpreprint/status/1161307573146005504

-

MindCodec Retweeted

Thrilled to announce that I just accepted an offer from the @DondersInst @AI_Radboud! I will join their faculty as Assistant Professor this fall.

Get in touch if you would like to work with me in the area of (visual) computational neuroscience and machine learning!

-

MindCodec Retweeted

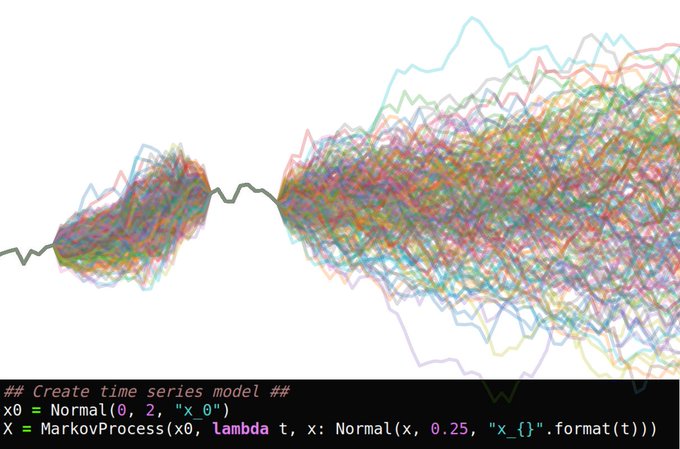

We are happy to share Brancher .35 with dedicated support for stochastic processes, infinite models and timeseries analysis. Try this on our new tutorial! https://colab.research.google.com/drive/1dqc-5DqJOrNw6CR96CDrarrKQ2KSabDH …

If you like our work, please share it and star us on GitHub! :) https://github.com/AI-DI/Brancher #PyTorch

-

MindCodec Retweeted

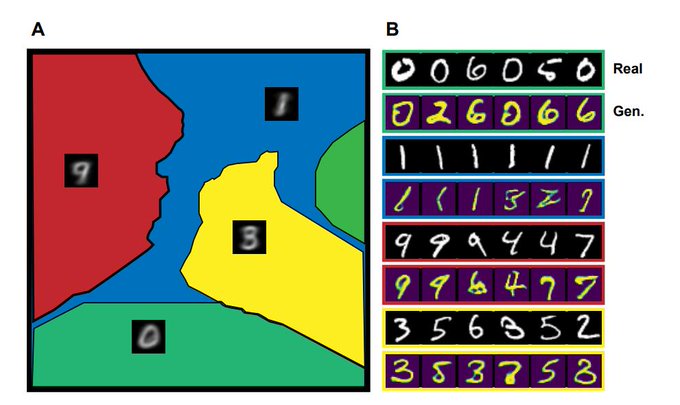

We are happy to share our latest work on generative modeling. k-GANs is a generalization of the well-known k-means algorithm in which every cluster is represented by a generative adversarial network. The mode collapse phenomenon is greatly reduced! #GAN https://arxiv.org/abs/1907.04050

-

MindCodec Retweeted

Coming soon on Brancher: 1) A dedicated interface for stochastic processes and timeseries analysis, 2) automatic construction of variational distributions. https://github.com/AI-DI/Brancher https://brancher.org/ #PyTorch #python

-

MindCodec Retweeted

Check out our new preprint: a large fMRI dataset with ~23 hours of video stimuli (Dr Who)! https://twitter.com/biorxiv_neursci/status/1146083491664269314

-

MindCodec Retweeted

ICYMI (weekly newsletter)

New Tools

1. @OttomaticaLLC slim

2. @pybrancher

3. Entropic

4. NeuronBlocks by @msdev

5. CJSS by @xsanda

Subscribe http://bit.ly/2PQYBKQ #Docker #Python #CSS #DeepLearning #javascript

-

MindCodec Retweeted

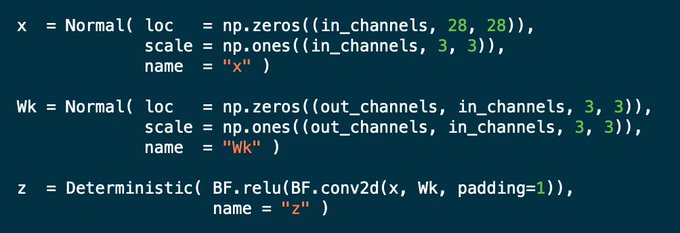

Check out our new probabilistic deep learning tutorial: https://colab.research.google.com/drive/1YNwZpJgrsicK3Pz8igAktbdocm-gA9mV …

You will learn how to build deep probabilistic models using Brancher and how to integrate existing #PyTorch networks in your Brancher models.

-

MindCodec Retweeted

this effect is amazingly strong and continues to intrigue... https://twitter.com/DannyDutch/status/1142068351855943683

-

MindCodec Retweeted

Happy to announce that the paper "Computational Resource Demands of a Predictive Bayesian Brain" by @IrisVanRooij and @JohanKwisthout is online now in @CompBrainBeh and freely accessible: https://link.springer.com/article/10.1007%2Fs42113-019-00032-3 … 1/7

-

MindCodec Retweeted

Check GitHub - @pybrancher A user-centered #Python package for differentiable probabilistic inference powered by @PyTorch. Example tutorials: TimeSeries Analysis / autocorrelation, Bayesian inference ... https://github.com/AI-DI/Brancher

-

MindCodec Retweeted

1/3: Thank you for your interest and support! We are soon going to share a roadmap that will outline our plans for future developments. Among other things, we are going to support symbolic/analytic computations and discrete latent variables.

-

MindCodec Retweeted

We are excited to announce the release of Brancher, a #python module for deep probabilistic inference powered by @PyTorch: http://brancher.org

Check out our tutorials in @GoogleColab here: https://brancher.org/#examples https://brancher.org/#tutorials

-

Our latest paper on stochastic optimization is out! We use Taylor expansion and a pinch of complex analysis to get low variance gradient estimators for models with either discrete or continuous latent variables. https://arxiv.org/abs/1904.00469

-

MindCodec Retweeted

#ICML2019 highlight (jetlag special): A Contrastive Divergence for Combining Variational Inference and MCMC https://www.inference.vc/icml-highlight-contrastive-divergence-for-variational-inference-and-mcmc/ …

-

MindCodec Retweeted

StyleGAN trained on abstract art!

Some of the training images are zoomed-in photos/digital art, and others are zoomed-out photos including the picture frame - hence the zooming/matting effect. Also, the data (from Flickr) includes some DeepDream samples, which it picked up on.

-

MindCodec Retweeted

Perceptual Straightening of Natural Videos

a short post on a recent paper from Eero Simoncelli's group. https://www.inference.vc/perceptual-streightening-of-natural-videos/ …
