Neural Networks for Face Recognition with TensorFlow

Michael Guerzhoy (University of Toronto and LKS-CHART, St. Michael's Hospital, guerzhoy@cs.toronto.edu)

Overview

In this assignment, students build several feedforward neural networks for face recognition using TensorFlow. Students train both shallow and deep networks to classify faces of famous actors. The assignment serves as an introduction to TensorFlow, and it emphasizes visualizing the weights to explain how the networks work. Rather than "fill in" missing pieces of the handout code, students modify and extend it and write completely new code. This allows students to experience developing a machine learning system from scratch, using only code similar to what can be found on the internet.

Students train a one-hidden-layer neural network for face classification. They then improve the performance of their system by building a convolutional network and using transfer learning: they use the activations of AlexNet pretrained on ImageNet as the features for their face classifier.
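A minimal NumPy sketch of the forward pass of a one-hidden-layer face classifier, to make the computation concrete. The tanh activation, the 32x32 grayscale input, the 30 hidden units, and the 6 actor classes are illustrative assumptions, not values fixed by the assignment, and students implement the network in TensorFlow rather than NumPy. For the transfer-learning part, the same classifier could in principle take flattened AlexNet activations as `x` instead of raw pixels; only the input dimension `D` changes.

```python
import numpy as np

def forward(x, W0, b0, W1, b1):
    """One-hidden-layer classifier: x (N, D) -> hidden activations, class probabilities (N, K).

    W0: (D, H) input-to-hidden weights; W1: (H, K) hidden-to-output weights.
    """
    h = np.tanh(x @ W0 + b0)                       # hidden activations, (N, H)
    logits = h @ W1 + b1                           # class scores, (N, K)
    logits -= logits.max(axis=1, keepdims=True)    # subtract row max for numerical stability
    e = np.exp(logits)
    return h, e / e.sum(axis=1, keepdims=True)     # softmax over the K classes

# Illustrative sizes (assumed): 32x32 grayscale images, 30 hidden units, 6 actors.
rng = np.random.default_rng(0)
N, D, H, K = 4, 32 * 32, 30, 6
x = rng.random((N, D))
W0, b0 = rng.normal(0, 0.01, (D, H)), np.zeros(H)
W1, b1 = rng.normal(0, 0.01, (H, K)), np.zeros(K)
h, probs = forward(x, W0, b0, W1, b1)
predicted_actor = probs.argmax(axis=1)             # index of the most likely actor per image
```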

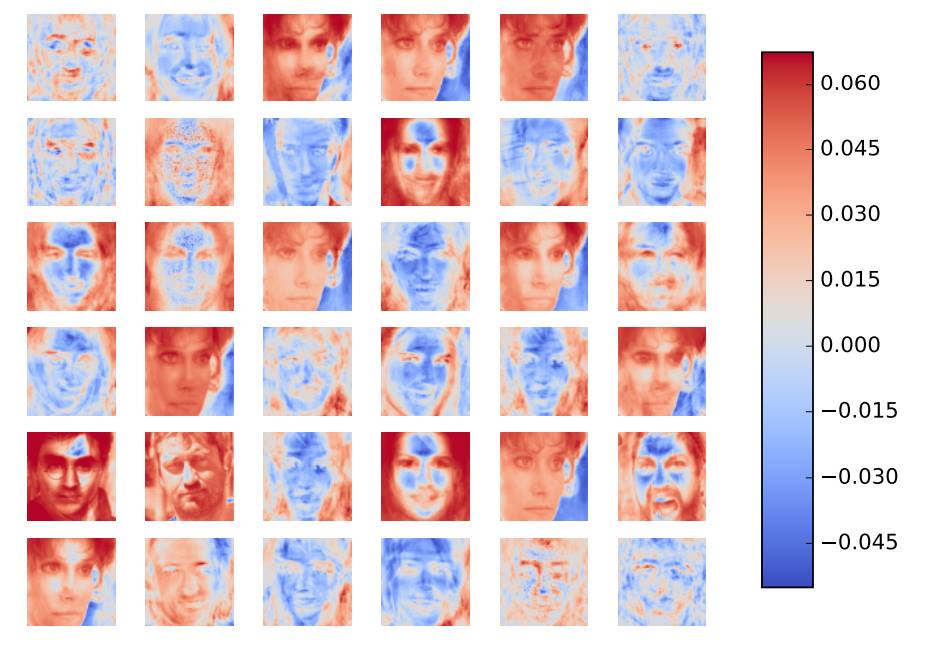

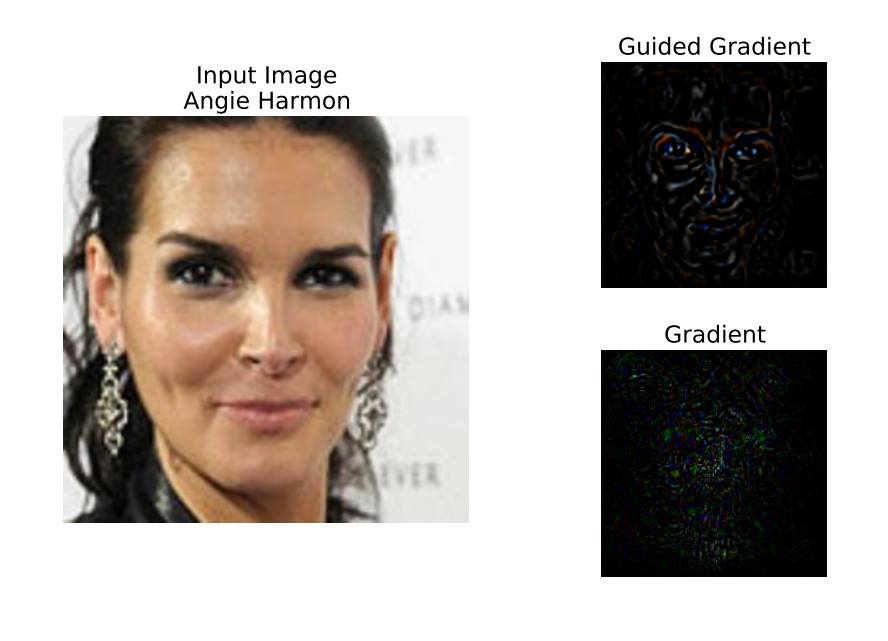

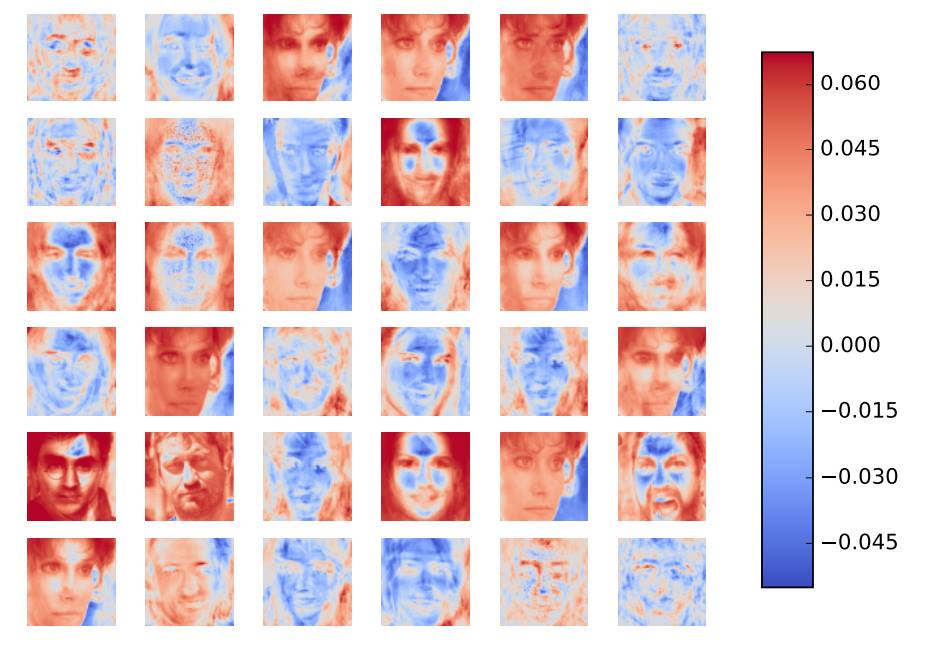

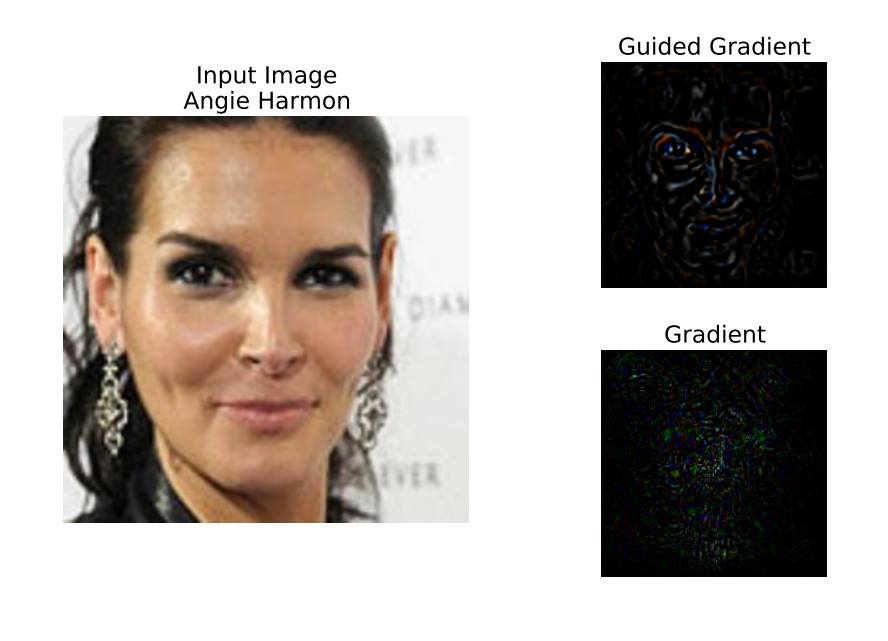

Students visualize the weights of the neural networks they train. Visualizing the weights allows students to understand feedforward one-hidden-layer neural networks in terms of template matching and to explore overfitting. Students can demonstrate that, with enough hidden units, a one-hidden-layer neural network will "memorize" faces. Students are asked to explore a visualization strategy of their choice (e.g., Guided Backpropagation) for understanding the convolutional network they train.
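The template-matching view can be sketched directly: the pre-activation of hidden unit j is the dot product of the flattened image with column j of the input-to-hidden weight matrix, so each column acts as an image-shaped template. The NumPy sketch below assumes a weight matrix `W0` of shape (D, H) with D = 32*32 pixels and an output matrix `W1` of shape (H, K); the helper names, the random stand-in weights, and the heuristic of picking the unit with the largest outgoing weight to a given actor are all illustrative assumptions.

```python
import numpy as np

def weights_as_image(W0, j, shape=(32, 32)):
    """Reshape the incoming weights of hidden unit j into an image scaled to [0, 1]."""
    w = W0[:, j].reshape(shape)
    span = w.max() - w.min()
    if span == 0:
        return np.zeros(shape)          # constant weights: nothing to show
    return (w - w.min()) / span         # min-max rescale for display

def most_useful_unit(W1, actor):
    """Hidden unit whose outgoing weight most strongly favors one actor (a heuristic)."""
    return int(np.argmax(W1[:, actor]))

rng = np.random.default_rng(1)
W0 = rng.normal(size=(32 * 32, 30))     # stand-in for trained input-to-hidden weights
W1 = rng.normal(size=(30, 6))           # stand-in for trained hidden-to-output weights
j = most_useful_unit(W1, actor=2)
template = weights_as_image(W0, j)

# The unit's pre-activation (bias omitted) is a dot product with this template.
x = rng.random(32 * 32)
pre_activation = x @ W0[:, j]
```

With matplotlib, `plt.imshow(template, cmap="gray")` displays the result. With enough hidden units, such templates can come to resemble individual training faces, which is the memorization described above.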

TensorFlow is introduced by giving students working code that trains a one-hidden-layer neural network on the MNIST dataset. We also provide a beginner-friendly implementation of AlexNet in TensorFlow, along with weights pretrained on ImageNet.

Visualization of the parameters of a one-hidden-layer neural network that sometimes "memorizes" faces because it contains a very large number of hidden units. Each image visualizes the incoming weights of one unit in the hidden layer (credit: Davi Frossard).

Guided Backpropagation visualization of the pixels that were the most important for classifying the image above as Angie Harmon (credit: Davi Frossard).
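Guided Backpropagation differs from ordinary backpropagation only at ReLU units: on the way back, a gradient is passed only where the forward input to the ReLU was positive and the incoming gradient is also positive. The NumPy sketch below applies that rule to a small one-hidden-layer ReLU network rather than to the convolutional network from the assignment, purely to keep the example short; the network, sizes, and function names are hypothetical. The result has one value per pixel and is reshaped to the image size for display.

```python
import numpy as np

def guided_backprop(x, W0, b0, W1, k):
    """Guided-backprop saliency of class score k with respect to the input x, shape (D,).

    Assumed network: score = relu(x @ W0 + b0) @ W1, with W0: (D, H), W1: (H, K).
    """
    z = x @ W0 + b0                       # hidden pre-activations, (H,)
    grad_h = W1[:, k]                     # d score_k / d hidden activations, (H,)
    # Ordinary backprop through ReLU keeps gradients where z > 0;
    # guided backprop also discards negative incoming gradients.
    grad_z = grad_h * (z > 0) * (grad_h > 0)
    return W0 @ grad_z                    # (D,): one value per pixel

def plain_gradient(x, W0, b0, W1, k):
    """Ordinary gradient of the same class score, for comparison."""
    z = x @ W0 + b0
    return W0 @ (W1[:, k] * (z > 0))

rng = np.random.default_rng(2)
D, H, K = 16, 8, 3
x = rng.random(D)
W0, b0 = rng.normal(size=(D, H)), np.zeros(H)
W1 = rng.normal(size=(H, K))
saliency = guided_backprop(x, W0, b0, W1, k=0).reshape(4, 4)  # map back to a 4x4 "image"
```

When every incoming gradient is positive, the two functions agree; the guided version differs only by suppressing negative gradient signals, which is what makes its visualizations look cleaner.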

The assignment can be completed on a single CPU, but students should be encouraged to experiment with cloud services and GPUs.

Meta Information

Summary

Students build feedforward neural networks for face recognition using TensorFlow and then visualize the weights of the networks they train. The visualization allows students to understand feedforward one-hidden-layer neural networks in terms of template matching and to explore overfitting. Using a framework such as TensorFlow lets students run a variety of experiments in order to obtain interesting visualizations.

In the second part of the assignment, students use transfer learning and build a convolutional network to improve the performance of their face recognition system. For bonus marks, students visualize what the convolutional network is doing.

Students work with a bare-bones and comprehensible implementation of AlexNet pretrained on ImageNet, and with a TensorFlow implementation of a neural network that classifies MNIST digits.

Topics

Feedforward neural networks, face recognition, weight visualization, overfitting, transfer learning, convolutional neural networks.

Audience

Third- and fourth-year students in introductory ML classes. Students need to have had practice writing medium-sized programs (several hundred lines of code).

Difficulty

Students find this assignment quite difficult. Spending more than 10 hours on it is not unusual.

Strengths

- Students build their own face recognition system using only code that is similar in spirit to what they might find on the internet. Students clean up their own data and write their own code (perhaps copying the handout code as appropriate).

- Students can obtain beautiful visualizations that explain how neural networks classify faces.

- Students become familiar with TensorFlow. After completing the course, students have a working image classifier that can be applied to other datasets.

- There are many options for students to extend the project, especially if they are interested in visualization.

- Students gain hands-on experience with AlexNet. Many of our students later used the code we provided in their personal and research projects.

- This is an excellent opportunity to get students working with cloud services and GPUs: while the assignment is completely doable on a CPU, it motivates their use.

Weaknesses

- This assignment guides students through building a machine learning system without relying on unmodified handout code. This is beneficial, but it makes the assignment quite challenging.

Dependencies

Students should have a good understanding of feedforward neural networks and should have seen a brief explanation of how to visualize their weights. Students should have had a brief introduction to ConvNets and transfer learning. Slides are available upon request.

Variants

- The assignment can be split into two parts.

- We have used this assignment's dataset for earlier assignments in our courses: once with k-nearest neighbors and once with linear regression. While the assignment is self-contained, reusing the dataset from earlier assignments lightens the students' workload and shows them the advantages of more sophisticated methods.

- The bonus part is open-ended. Many recent techniques (e.g. Grad-CAM) are accessible to students.

- We intentionally provide handout code that needs to be modified. Instructors may choose to hand out code that runs "out of the box."

- To help prevent plagiarism, we have used different sets of actors in different course offerings.

- Students can try pre-processing the images in various ways to improve performance.
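To illustrate how approachable Grad-CAM is, its core fits in a few lines once the feature maps of a chosen convolutional layer and the gradients of a class score with respect to those maps are available (in TensorFlow, the gradients can be computed with automatic differentiation). Each feature map is weighted by the spatial average of its gradient, the weighted maps are summed, and a ReLU keeps only positive evidence. This NumPy sketch assumes channels-first arrays of shape (C, H, W) and uses random stand-in data; upsampling the heat map to the input resolution for overlay is omitted.

```python
import numpy as np

def grad_cam(feature_maps, grads):
    """Grad-CAM heat map from one convolutional layer.

    feature_maps, grads: arrays of shape (C, H, W), where grads[c] holds
    d(class score) / d(feature_maps[c]).
    """
    alphas = grads.mean(axis=(1, 2))                  # (C,): importance of each channel
    cam = np.tensordot(alphas, feature_maps, axes=1)  # weighted sum over channels -> (H, W)
    cam = np.maximum(cam, 0)                          # keep only positive evidence
    return cam / cam.max() if cam.max() > 0 else cam  # scale to [0, 1] for display

rng = np.random.default_rng(3)
A = rng.random((64, 13, 13))        # stand-in feature maps (shape is an assumption)
dY_dA = rng.normal(size=A.shape)    # stand-in gradients of the class score
heatmap = grad_cam(A, dY_dA)
```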


Handout

Handout: html (markdown source).

Lessons learned

- Students enjoy the fact that they create the system from scratch, copying and adapting our code rather than completing it.

- Installing TensorFlow can cause problems both on students' machines and in large computer labs. However, installing the latest version of Ubuntu in a virtual machine and then installing TensorFlow there takes care of the problem.

- The major cloud platforms (AWS, Azure, GCP, etc.) offer generous credits to students, but this sometimes requires an application from the instructor before the course starts. Students benefit from trying these services and from using GPUs in the cloud. However, we found that we cannot rely on every student being able to use them.

- We found that providing the students with a range of possible performance figures works reasonably well. We recommend against providing the "correct" performance figure.

- Providing reference output images is important. (In particular, it is easy for students to think they computed and displayed the gradient of the output with respect to the image when they haven't done so.) It is also important to emphasize that some variation is to be expected.
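One way for students to confirm that the image they display really is the gradient of the output with respect to the input is to compare it against a central finite-difference estimate. The NumPy sketch below uses a small stand-in "class score" (a one-hidden-layer tanh network on a 4x4 image) in place of a real network; the functions and sizes are hypothetical. For a full-size image, checking a handful of randomly chosen pixels is usually enough.

```python
import numpy as np

def numerical_gradient(f, x, eps=1e-5):
    """Central-difference estimate of df/dx for a scalar-valued f of a float array x."""
    grad = np.zeros_like(x, dtype=float)
    for idx in np.ndindex(x.shape):
        old = x[idx]
        x[idx] = old + eps
        f_plus = f(x)
        x[idx] = old - eps
        f_minus = f(x)
        x[idx] = old                       # restore the original pixel value
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad

rng = np.random.default_rng(4)
W0, w1 = rng.normal(size=(16, 5)), rng.normal(size=5)

def score(img):
    """Stand-in class score: sum_j w1[j] * tanh(a_j), with a = img.ravel() @ W0."""
    return np.tanh(img.ravel() @ W0) @ w1

def analytic_gradient(img):
    """Hand-derived d score / d img, the quantity a student would display."""
    a = img.ravel() @ W0
    return (W0 @ ((1 - np.tanh(a) ** 2) * w1)).reshape(img.shape)

img = rng.random((4, 4))
num = numerical_gradient(score, img)
analytic = analytic_gradient(img)
rel_err = np.abs(num - analytic).max() / np.abs(analytic).max()
```

A relative error many orders of magnitude above the finite-difference noise (roughly `eps**2`) usually points to a bug in the analytic gradient.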