CS 154 Homework #4

Getting Set with OpenCV (and Visual Servoing)

Part 1: Set!

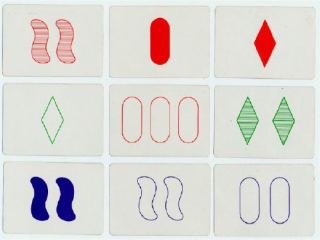

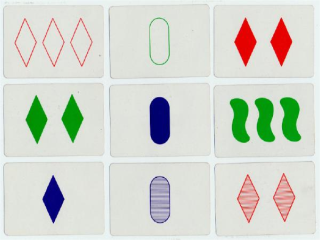

As an application of some of the visual processing discussed in lecture, and by way of introducing the OpenCV vision library, this problem asks each group to implement a program that plays the card game, Set. If you are not familiar with the game, there is an online description of the rules at http://www.setgame.com/set/rules_set.htm. Here are two examples of nine set cards - I believe there is at least one set in each:

What's interesting about the game of Set is that people and computers both find part of the game very simple and part of the game quite challenging, but the easy and hard parts are swapped between the two!

People tend to find the identification/labeling of the cards trivial, e.g., "That card has two green filled-in diamonds." However, finding three cards that constitute a legal set from among a collection of randomly-chosen cards can be tricky. In contrast, it is much simpler to create a program to find a set (or all sets) among some labeled cards than it is to actually determine the appropriate label. This problem asks you to write a program that handles both of these tasks. Afterwards, you should be able to play against your computer in the game!
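To see why the set-finding half is the easy one for a computer, here is a minimal Python sketch. The `Card` tuple and the functions `is_set` and `find_sets` are hypothetical names for illustration, not part of the starter code, and the attribute strings are placeholders for whatever labels your vision code produces. The sketch relies on the rule that three cards form a set when each attribute is either all the same or all different across the three cards.

```python
from itertools import combinations
from typing import NamedTuple


class Card(NamedTuple):
    """A labeled card; the field values are illustrative placeholders."""
    number: int    # 1, 2, or 3 symbols
    color: str     # e.g. "red", "green", "purple"
    shading: str   # e.g. "solid", "striped", "open"
    shape: str     # e.g. "diamond", "oval", "squiggle"


def is_set(a, b, c):
    """True if every attribute is all-same or all-different across the cards.

    Three values are all-same (1 distinct) or all-different (3 distinct)
    exactly when the number of distinct values is not 2.
    """
    return all(len({x, y, z}) != 2 for x, y, z in zip(a, b, c))


def find_sets(cards):
    """Brute-force search: test every triple of cards."""
    return [trio for trio in combinations(cards, 3) if is_set(*trio)]
```

With nine cards on the table there are only 84 triples to test, so brute force is more than fast enough; the real work is producing the labels.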

A software framework for Windows/Mac OS X

We will use the OpenCV 2.0 library, which is the best of the vision libraries "out there," though that is not to say it has abandoned its researchware roots.

Here is a link to notes that will help get the library set up for your platform: the Getting Set with OpenCV wiki page.

Starting software for Set

This link should point to the latest version of the starter code.

The above file is an archive whose folder contains both a Mac OS X Xcode project and a Windows Visual Studio 2008 project. They share the same source code, with the necessary differences #define'd in or out. The starter code includes:

- the ability to change between camera and file-based image inputs

- examples of creating and moving windows

- a raw_image window with the original image

- a draw_image window to show annotations atop the raw_image

- a binary_image window with the results of thresholding the raw_image

- a trackbar window with sliders that can change parameters

- examples of mouse interaction and keyboard event handling

- examples of getting and setting individual pixels

- examples of some basic API calls

Here are a few API calls that the starter code does not include, but that you might want to explore to see if they help with Set or other vision processing:

- cvCvtColor, which performs many different color conversions, including to HSV space with the flag CV_BGR2HSV

- cvFindContours, which extracts region contours from a binary image. The sample programs contours.c and squares.c show examples of how to use contours; the tutorial at Noah Kuntz's Drexel site might help, as well.

- cvWatershed, an adaptive segmentation algorithm. There is a watershed.cpp sample file and the OpenCV reference is here.

- cvBlobsLib, a library for reasoning about regions from a binary image. Its library page is here.
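As a rough illustration of why the HSV conversion in the list above can help with labeling, the sketch below classifies a single pixel's color by its hue. It uses Python's standard `colorsys` module rather than OpenCV, so the scaling differs from what `cvCvtColor` returns (for 8-bit images OpenCV stores hue as 0 to 179, not degrees). The function names and every threshold here are assumptions to be tuned against your own camera and lighting.

```python
import colorsys


def bgr_to_hsv(b, g, r):
    """Convert one 8-bit BGR pixel to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v


def guess_card_color(b, g, r, min_saturation=0.25):
    """Guess a Set card color from one pixel; thresholds are illustrative."""
    hue, sat, _ = bgr_to_hsv(b, g, r)
    if sat < min_saturation:
        return "background"   # washed-out pixels: white card or glare
    if hue < 30 or hue >= 330:
        return "red"          # red wraps around 0 degrees
    if 80 <= hue < 170:
        return "green"
    if 250 <= hue < 330:
        return "purple"
    return "unknown"
```

Because hue is largely separate from brightness, a test like this tends to be less sensitive to lighting changes than thresholds on raw B, G, and R values, though that should be checked on your own images.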

Part 2: Project update ~ visual servoing

For the second part of this assignment, each team should update its project website with additional progress towards its current goal. If you're following the "default" path, then you should expand the finite-state-machine control of your system to include vision and, specifically, a visual servoing task. As a reminder, visual servoing simply refers to the following process:

- You define an error in terms of image quantities, e.g., a difference from a desired size of an image region or a difference from the desired position of an image region.

- You then connect the robot's action with that visual difference, i.e., the robot moves to reduce the difference.

- That process continues until the task is satisfied - for example, until the visual error drops below a threshold.
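The three steps above can be sketched as a simple proportional controller. This is only one possible design under stated assumptions: the error is the horizontal pixel offset of a tracked region, and `get_region_x` and `send_turn` are hypothetical callbacks standing in for your vision measurement and your robot's turn command. The gain, tolerance, and sign convention are placeholders that depend on your robot.

```python
def servo_step(measured_x, desired_x, gain=0.005, tolerance=5.0):
    """One servoing step. Returns (turn_command, done).

    The error is defined purely in image quantities (pixels). The command
    is proportional to the error, so the robot acts to reduce it.
    """
    error = desired_x - measured_x
    if abs(error) < tolerance:
        return 0.0, True          # visual error is below the threshold
    return gain * error, False


def run_servo(get_region_x, send_turn, desired_x, max_steps=1000):
    """Repeat sense -> act until the error is small or max_steps is reached.

    get_region_x and send_turn are hypothetical callbacks supplied by the
    caller. Returns the step index at which the task finished, or None.
    """
    for step in range(max_steps):
        command, done = servo_step(get_region_x(), desired_x)
        send_turn(command)        # a 0.0 command is sent once done
        if done:
            return step
    return None
```

In a finite-state machine, `servo_step` would typically run once per pass through the loop, with `done` triggering the transition to the next state.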

Your visual servoing task may use an on-board camera or an off-board camera; it may involve observing the robot directly, or observing landmarks of your own design. It may involve sequential control, e.g., see an arrow and turn until it leaves the field of view, or continuous control, e.g., center on a region of a specific color in order to follow a person or another robot.

In short, the task is up to you -- and up to the problem you're working towards. The key is that for this hwk's update, you should show your system using direct visual control.

Software details

If you are using Tekkotsu (AIBO and Chiaras), then you will likely use this hwk as an opportunity to learn the vision interface and API that Tekkotsu provides.

If you're using OpenCV (most or all other teams), we have found that making the vision system a server allows flexible integration of vision into an existing robot-control system. The finite-state machine that executes the sense-plan-act (SPA) loop will act as a client, connecting to the vision server and grabbing data from it each time through that loop.

The exact messages passed back and forth between server and client will depend on the data your particular project needs to extract from the video stream. There is already code in both the Mac OS X and Windows versions of the vision code that sets up a socket server. At the moment, the server has only the ability to echo back the client's messages. You will want to alter the message-passing in a way that suits your project. On the robot-control side, you can use the client.py file, below, as a starting point for grabbing that information into your FSM.

The server.py file is provided simply to help prototype and debug your client code.

Warning: the vision code's server and the above Python client interact by having the server always send a response message to each client request. If you use the existing code as a starting point, be sure you maintain this interaction, or you will find the server (and/or client) unresponsive.
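The request/response discipline described here can be sketched as follows. This is not the provided client.py or server.py; `request` and `start_echo_server` are hypothetical names, and the message contents are placeholders. It also assumes replies are short enough to arrive in a single `recv` call, which a real protocol should not rely on.

```python
import socket
import threading


def request(sock, message, bufsize=1024):
    """Send one request, then block until the server's reply arrives.

    Every send is paired with exactly one receive, so the client and
    server never both end up waiting on each other.
    """
    sock.sendall(message.encode("ascii"))
    reply = sock.recv(bufsize)    # assumes the reply fits in one recv
    if not reply:
        raise ConnectionError("server closed the connection")
    return reply.decode("ascii")


def start_echo_server(host="127.0.0.1"):
    """Stand-in echo server for debugging a client; returns its port."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind((host, 0))      # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]

    def serve():
        conn, _ = listener.accept()
        with conn:
            while True:
                data = conn.recv(1024)
                if not data:      # client disconnected
                    break
                conn.sendall(data)   # always answer every request
        listener.close()

    threading.Thread(target=serve, daemon=True).start()
    return port
```

A client in the FSM might call `request(sock, ...)` once per pass through its loop. If the server ever skips a reply, that call blocks, which is exactly the unresponsiveness the warning describes.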

What to report on...

In your update, please include

- A description of the activities you've worked on since the last project update.

- Several images of your system, including screenshots that explain the visual processing going on. As always, pictures are also (or especially!) welcome that include the team, passersby, or anything not working as expected...

- One or more videos that show your system in action. Or inaction.

- Your vision code (just the main.cpp and other additional source files) and your control code (again, only the source files), attached to the wiki. These can be uploaded separately or together in a zip archive.